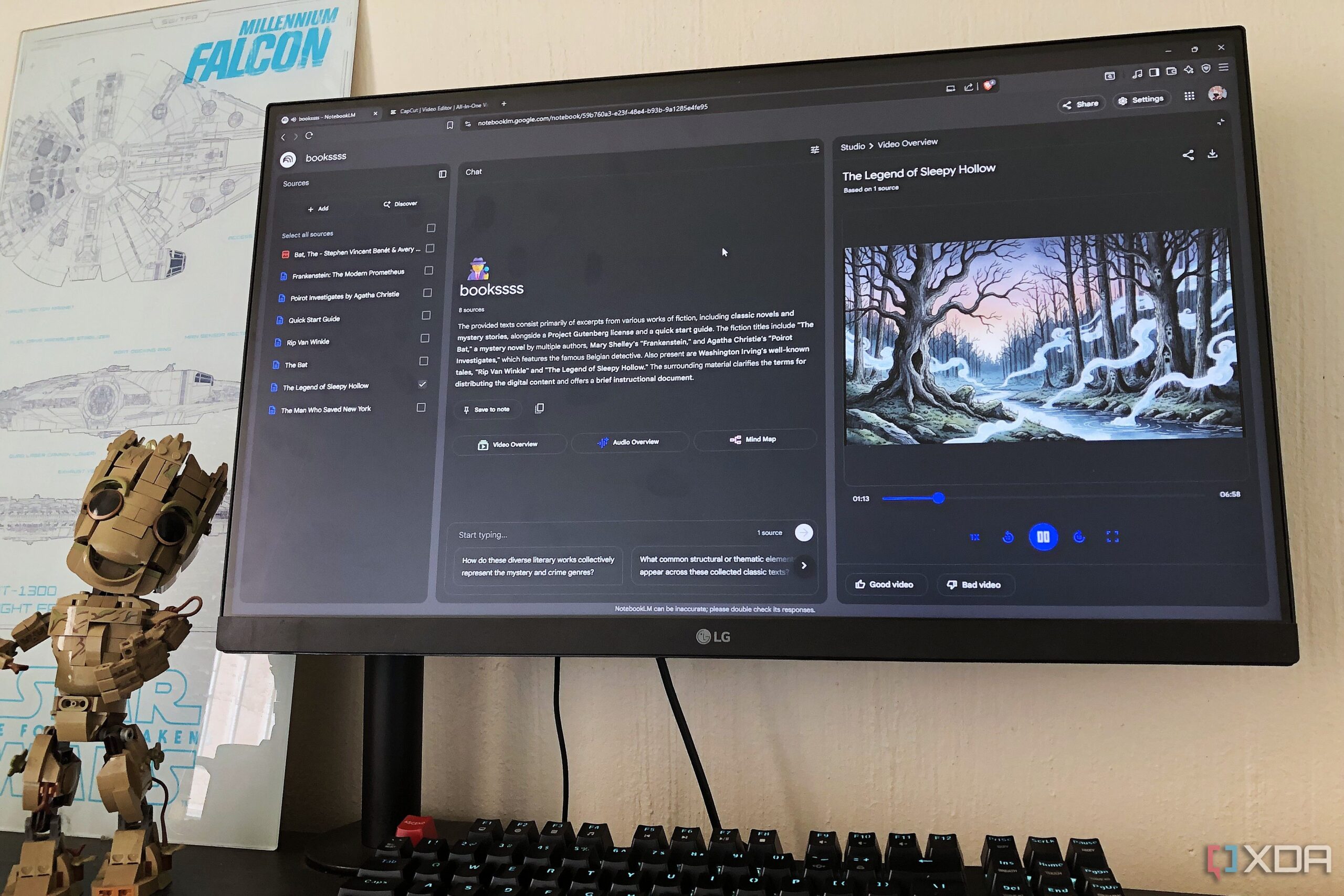

URGENT UPDATE: MIT researchers have announced a new approach that enables large language models (LLMs) to permanently absorb new information by updating their own internal parameters, changing how AI systems can learn and adapt after deployment. The development could make AI more dynamic and better able to evolve with user interactions.

The new technique, called SEAL (for self-adapting LLMs), lets these models generate their own study materials from user input, much as students write notes before an exam. In the researchers' tests, SEAL improved accuracy on question-answering tasks by nearly 15% and, on some skill-learning tasks, raised the success rate by more than 50%.

The research will be presented at the upcoming Conference on Neural Information Processing Systems (NeurIPS). Co-lead author Jyothish Pari, an MIT graduate student, described the motivation: “Just like humans, complex AI systems can’t remain static. We want to create a model that can keep improving itself.”

Traditionally, once an LLM is deployed, its parameters stay fixed, so it cannot permanently incorporate new knowledge. The new framework instead lets the model write several versions of incoming information, update itself on each, and test which version leads to the best performance. This mirrors human learning, where trial and error is critical to mastering new concepts.

The SEAL framework builds on the in-context learning abilities of existing LLMs: given a user input, the model generates synthetic training data, called a self-edit, that restates the information. The model uses each self-edit to update its own weights, and a reinforcement learning method rewards the self-edits that most improve downstream performance. Because the winning update is written into the model's weights, the information is internalized rather than held only in the prompt.
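For readers curious about the mechanics, the trial-and-error loop described above can be sketched in a few lines of Python. This is a toy illustration, not the researchers' code: the "model" is a hypothetical dictionary of question/answer pairs, a "self-edit" is a list of such pairs, and copying them into the dictionary stands in for the fine-tuning step SEAL actually performs on an LLM's weights. The names `ToyModel`, `apply_self_edit`, `reward`, and `seal_step`, and the 0.5 threshold, are invented for this example.

```python
from dataclasses import dataclass, field

# Hypothetical toy "model": its "weights" are just a question -> answer map.
# In SEAL, a self-edit drives a real fine-tuning step on an LLM; here,
# "training" simply copies facts into this dictionary.
@dataclass
class ToyModel:
    memory: dict[str, str] = field(default_factory=dict)

    def answer(self, question: str) -> str:
        return self.memory.get(question, "unknown")

# A self-edit: the synthetic "study notes" the model writes for itself,
# represented here as (question, answer) pairs.
SelfEdit = list[tuple[str, str]]


def apply_self_edit(model: ToyModel, edit: SelfEdit) -> ToyModel:
    """Return an updated copy of the model (stand-in for a weight update)."""
    updated = ToyModel(dict(model.memory))
    for question, answer in edit:
        updated.memory[question] = answer
    return updated


def reward(model: ToyModel, eval_set: list[tuple[str, str]]) -> float:
    """Fraction of held-out questions the model answers correctly."""
    correct = sum(model.answer(q) == a for q, a in eval_set)
    return correct / len(eval_set)


def seal_step(model: ToyModel, candidates: list[SelfEdit],
              eval_set: list[tuple[str, str]], threshold: float = 0.5):
    """Trial and error: apply each candidate self-edit to a copy of the model,
    score the result, commit the best update, and keep every edit that
    cleared the threshold (the examples an RL step would learn from)."""
    scored = [(reward(apply_self_edit(model, e), eval_set), e) for e in candidates]
    best_score, best_edit = max(scored, key=lambda pair: pair[0])
    kept = [e for score, e in scored if score >= threshold]
    return apply_self_edit(model, best_edit), best_score, kept


# Usage: three candidate "study sheets" for a passage about SEAL.
base = ToyModel()
eval_set = [("Where was SEAL developed?", "MIT"),
            ("What does SEAL stand for?", "self-adapting LLMs")]
candidates = [
    [("Where was SEAL developed?", "MIT")],                   # partial notes
    [("Where was SEAL developed?", "MIT"),
     ("What does SEAL stand for?", "self-adapting LLMs")],    # complete notes
    [("What is a seal?", "a marine mammal")],                  # off-topic notes
]
updated, best, kept = seal_step(base, candidates, eval_set)
print(best, len(kept))  # 1.0 2
print(updated.answer("What does SEAL stand for?"))  # self-adapting LLMs
```

In SEAL, the reinforcement learning step goes further and teaches the model to write better self-edits over time; this sketch only collects the high-scoring candidates that such training would draw on, and it leaves the original model untouched until the best update is committed.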

Co-lead author Adam Zweiger, an undergraduate at MIT, stated, “Our hope is that the model will learn to create the best study sheets, leading to a better overall performance.” This adaptability is crucial, especially in environments where AI must respond to ever-changing information.

While the results are promising, challenges remain. The researchers acknowledge the problem of catastrophic forgetting, in which a model's performance on earlier tasks declines as it learns new information. They plan to address this limitation in future work and may extend the approach so that multiple LLMs train and learn from each other.

The implications of this research extend beyond academic settings. AI systems that learn and adapt in real time could be more effective in applications such as scientific research and complex problem-solving. “One of the key barriers to meaningful AI research is the inability to update based on interactions with new information,” Zweiger added, pointing to the potential for self-adapting models to contribute to advances in science and technology.

The work was supported by organizations including the U.S. Army Research Office and the U.S. Air Force AI Accelerator, a sign of defense-sector interest in how AI capabilities evolve.

As the researchers continue to refine the technique, expect more updates on this story as MIT's findings reach the wider tech community.