The race for artificial intelligence (AI) dominance is intensifying, driven by a belief in its inevitable benefits and the fear of falling behind global competitors, particularly China. American tech leaders such as Sam Altman and Elon Musk advocate for a future shaped by AI, while concerns about safety and societal impact are often overshadowed by the urgency to innovate. This dynamic raises critical questions about the direction of technological advancement and its alignment with human values.

Techno-Utopian Visions of AI

In a recent blog post, Sam Altman, CEO of OpenAI, articulated a vision of a future where AI surpasses human intelligence, potentially revolutionizing industries. The possibilities are vast: robots could manage entire supply chains, construct infrastructure, and even create more robots. Tech entrepreneurs like Marc Andreessen envision a world where AI solves pressing issues, such as disease and environmental collapse, ushering in an era of abundance. Yet this utopian outlook often neglects the potential consequences of such sweeping changes.

The ambition behind these advancements resembles the early 20th-century Technocracy movement, which sought to place scientific governance at the forefront of society. Today, the “Techno-Optimist Manifesto,” penned by Andreessen, echoes similar sentiments, suggesting that all societal problems can be resolved through technological innovation. However, this perspective faces backlash amid growing concerns over the unchecked power of technology companies, their control over personal data, and their effect on public trust.

Disruption and Societal Implications

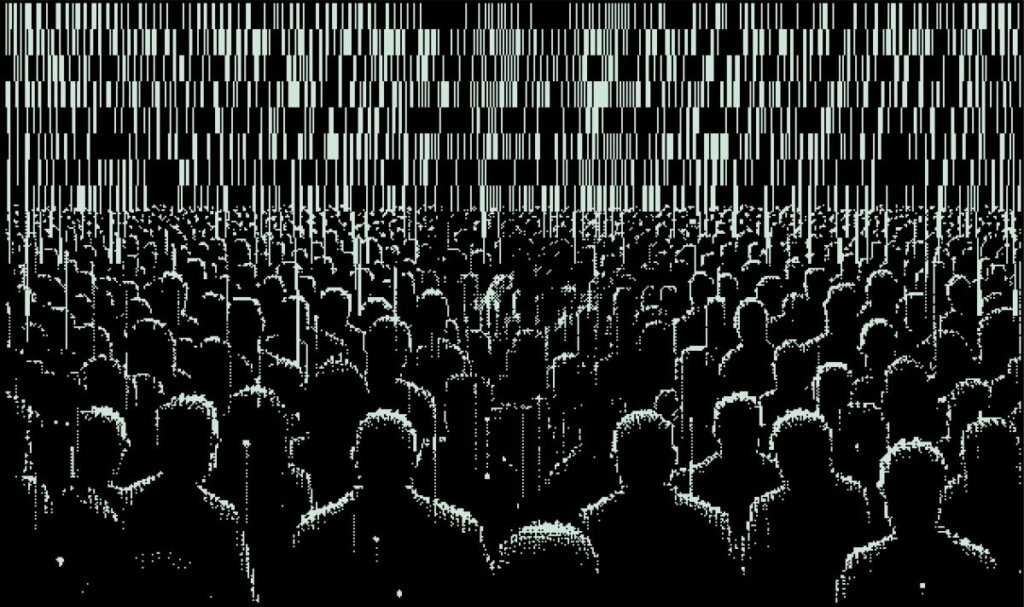

The rapid evolution of AI poses significant challenges, particularly in the workforce. Historical transitions, such as the Industrial Revolution, unfolded slowly enough to give societies time to adapt to job displacement. In contrast, AI threatens to eliminate a substantial portion of white-collar jobs within a few years, with estimates suggesting that as many as 50% of entry-level positions could be replaced. This shift could exacerbate economic inequality as fewer high-wage roles remain available, hollowing out the professional class.

Additionally, the integration of AI into critical sectors like healthcare and finance raises concerns about accountability and safety. Failures in AI systems can have dire consequences, yet many developers acknowledge their limited understanding of complex AI behaviors. “Hallucinations,” in which an AI system generates incorrect or fabricated information, remain common, which makes robust oversight essential.

As the U.S. navigates its place in the AI landscape, it must consider not only the speed of deployment but also the ethical implications of its technology. This urgency is underscored by China’s rapid advancements in AI applications, particularly in surveillance and governance. The challenge for the United States is to lead in AI by prioritizing safety and transparency rather than merely racing for dominance.

The emergence of a “technopolar” world, as described by political scientist Ian Bremmer, positions major tech companies as influential actors in global politics, sometimes rivaling the authority of nation-states. The unregulated power of these companies raises questions about accountability in a system where corporate interests may overshadow public welfare.

The historical analogy of the British East India Company serves as a cautionary tale, illustrating how entities with immense power can operate independently of national governance. As the U.S. grapples with the growing influence of Big Tech, there is an urgent need to establish frameworks for accountability and regulation.

Despite the promise of AI to improve human life, the lack of cohesive global governance remains a significant barrier to safe development. Initiatives such as the 2023 Bletchley Declaration aimed to foster collaboration among major AI powers, yet subsequent discussions have faltered. The reluctance of leaders like J. D. Vance to embrace stringent regulations further complicates efforts to ensure safe and ethical AI development.

As the dialogue surrounding AI continues to evolve, it is imperative to address fundamental questions about its potential to replace human agency. Even proponents of AI, such as Elon Musk, have expressed concerns about the risks, predicting a significant chance of catastrophic outcomes. The pace of AI development, fueled by investment that totaled $471 billion from 2013 to 2024, demands a balanced approach that builds ethical considerations into how the technology is designed and deployed.

The future of AI is unfolding rapidly, yet without adequate preparation and oversight, the consequences could be profound. As Dario Amodei, CEO of Anthropic, cautioned, the objective should be not only to accelerate AI development but also to steer it in a direction that aligns with human values and societal well-being. The challenge lies in creating a future where technology serves humanity, rather than dictating its course.