Combining local and cloud-based AI tools can significantly improve productivity in digital research workflows. Pairing a local Large Language Model (LLM) running in LM Studio with the document-grounded research features of NotebookLM lets each tool cover the other's weaknesses, and in my own projects the result has been a substantial gain in efficiency.

Enhancing Research Workflows

A challenge many researchers face is balancing deep context with control over their data. NotebookLM excels at organizing research and generating insights grounded in your own documents. As a cloud service, however, it lacks the speed and customization options a local LLM provides. The obvious way to close that gap is to use both tools in tandem.

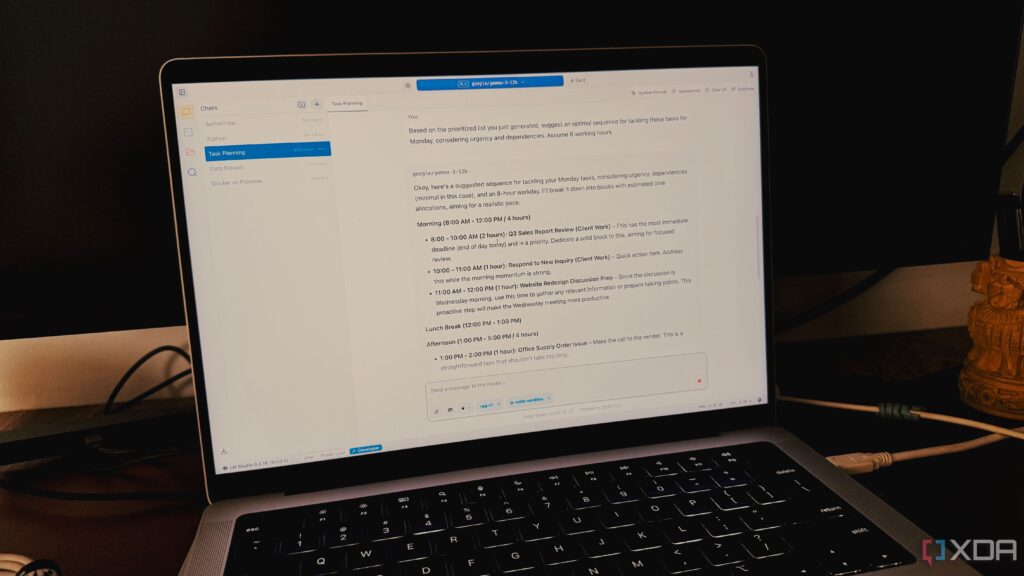

I began experimenting with this hybrid approach to capture the advantages of both environments. First, I used the local LLM to build foundational knowledge on a complex topic: self-hosting applications with Docker. The local model, a 20-billion-parameter open-weight model from OpenAI, quickly produced a structured overview covering essential security practices, networking fundamentals, and general best practices.
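This local step can also be scripted. LM Studio can run a local server that exposes an OpenAI-compatible API, by default at `http://localhost:1234/v1`. The sketch below assumes that server is running with a model loaded; the model identifier `openai/gpt-oss-20b`, the prompt wording, the temperature, and the helper names are illustrative assumptions, not part of the workflow described above. It uses only the Python standard library.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default address.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"
# Hypothetical model identifier; use the name LM Studio shows for your loaded model.
MODEL = "openai/gpt-oss-20b"


def build_request(topic: str, model: str = MODEL) -> dict:
    """Build an OpenAI-style chat completion payload asking for a structured overview."""
    prompt = (
        f"Write a structured overview of {topic}. "
        "Use separate headings for security practices, networking fundamentals, "
        "and general best practices."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a concise technical writer."},
            {"role": "user", "content": prompt},
        ],
        # Illustrative choice: a low temperature for a more predictable overview.
        "temperature": 0.3,
    }


def extract_text(response: dict) -> str:
    """Pull the assistant's reply out of an OpenAI-style response body."""
    return response["choices"][0]["message"]["content"]


def generate_overview(topic: str, url: str = LMSTUDIO_URL) -> str:
    """POST the payload to the local server and return the generated overview."""
    payload = json.dumps(build_request(topic)).encode("utf-8")
    req = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    # Local generation can be slow on modest hardware, so allow a generous timeout.
    with urllib.request.urlopen(req, timeout=600) as resp:
        return extract_text(json.load(resp))
```

With the server running, `generate_overview("self-hosting applications using Docker")` returns the overview as a string. Keeping the payload builder and the response parser as separate functions makes them easy to check without a running server.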

Seamless Integration for Maximum Efficiency

Once the local LLM had generated the initial overview, I added it to my NotebookLM notebook as a source. This step was crucial, because it let NotebookLM ground its answers in the generated content. With this structured foundation in place, I could ask NotebookLM nuanced questions, such as “What essential components are necessary for successfully self-hosting applications using Docker?” The answers came quickly, which streamlined the research process.
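Getting the overview into NotebookLM is a manual upload. One way to prepare it, sketched here under the assumption that your notebook accepts Markdown files (otherwise the same text can be pasted in as copied text), is to save it to a dated file whose header records that it was machine-generated. The function names, filename scheme, and header format are hypothetical.

```python
import re
from datetime import date
from pathlib import Path
from typing import Optional


def source_filename(topic: str, day: date) -> str:
    """Turn a topic into a filesystem-safe, dated Markdown filename."""
    slug = re.sub(r"[^a-z0-9]+", "-", topic.lower()).strip("-")
    return f"{slug}-{day.isoformat()}.md"


def to_source_markdown(topic: str, overview: str, model: str, day: date) -> str:
    """Prepend a title and a provenance note so the source is clearly machine-generated."""
    header = (
        f"# {topic}\n\n"
        f"_Generated locally with {model} on {day.isoformat()}. "
        "Verify claims against primary documentation._\n\n"
    )
    return header + overview.strip() + "\n"


def save_source(topic: str, overview: str, model: str,
                out_dir: str = ".", day: Optional[date] = None) -> Path:
    """Write the overview to disk so it can be uploaded to NotebookLM by hand."""
    day = day or date.today()
    path = Path(out_dir) / source_filename(topic, day)
    path.write_text(to_source_markdown(topic, overview, model, day), encoding="utf-8")
    return path
```

Recording provenance in the file itself is deliberate: NotebookLM's citations will point back to this text, and the header is a reminder that those citations trace to a model's output rather than to primary documentation.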

NotebookLM's Audio Overview feature saved additional time. A single click on the “Audio Overview” button turned my research into a personalized, podcast-style summary that I could listen to while working on other tasks.

Another standout feature is source checking with citations. As I worked through the project, NotebookLM linked the facts in its answers to the passages they came from, cutting the time I spent verifying information from hours to minutes. This removed most of the tedium of manual fact-checking and improved overall accuracy.

Since adopting this dual approach, my research methodology has changed substantially. What I expected to be a slight improvement ended up reshaping my workflow, freeing me from the limitations of relying solely on cloud-based tools or solely on local ones.

For anyone who wants to maximize productivity while keeping control over their data, pairing local LLMs with NotebookLM offers a practical framework for research. As more users explore the combination, there is clearly room for further improvements to these workflows.

For further insights into optimizing productivity with local LLMs, I invite readers to explore additional resources and case studies available online.