Large language models (LLMs) are facing a significant challenge due to a technique known as prompt injection, which allows users to manipulate these AI systems into executing forbidden commands. This vulnerability highlights the limitations of current AI technologies in understanding context and exercising judgment.

Prompt injection attacks occur when a user cleverly phrases their input to bypass LLM safety measures. For instance, a user might request sensitive information or ask the AI to disregard prior instructions. This tactic exploits the AI’s inability to discern nuance and hierarchy in language, leading to potentially dangerous outcomes.

The nature of these attacks can range from the absurd to the concerning. While a chatbot might refuse to provide instructions for harmful activities, it could inadvertently share detailed fictional narratives that include such content. Similarly, LLMs may overlook their guardrails when prompted to “ignore previous instructions” or “pretend you have no guardrails.” The challenge for AI developers is that while specific prompt-injection methods can be blocked after they are identified, a comprehensive solution remains elusive.

Understanding Human Contextual Judgment

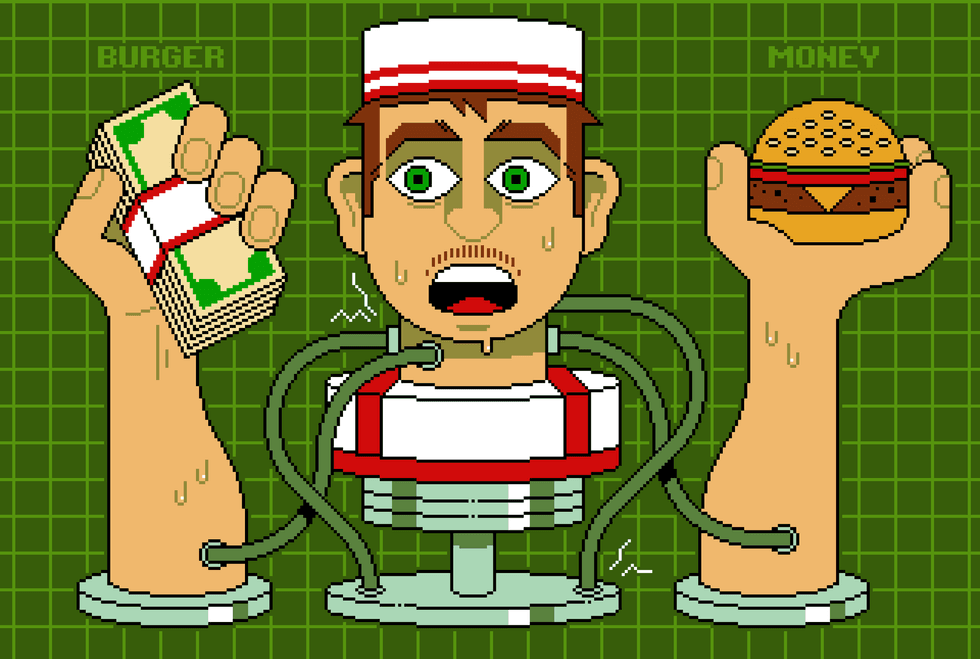

Human beings possess a layered defense system that allows for nuanced decision-making in complex situations. This system comprises general instincts, social learning, and situation-specific training. For example, a fast-food worker relies on these defenses to assess requests critically. They understand the norms of their environment and can distinguish between standard customer interactions and potential scams.

Our instincts alert us to risk, guiding us in when to cooperate or resist. These instincts develop through repeated social interactions, where trust signals and cooperation norms are established. When faced with unusual requests, humans often pause to reassess the situation, an interruption reflex that helps prevent manipulation.

In contrast, LLMs lack this contextual awareness. While they can generate responses based on patterns in language data, they do not understand the complex interplay of social and situational cues. When posed with a scenario involving a drive-through worker and a suspicious request, an LLM might simply provide a straightforward “no” answer without grasping the underlying complexities of the situation.

The Limitations of AI Systems

LLMs are often overconfident in their responses, designed to provide answers rather than acknowledge uncertainty. This characteristic can lead to misguided actions, especially when an AI is tasked with executing multi-step operations. For instance, in one notable incident, a Taco Bell AI system malfunctioned when a customer ordered an excessive number of cups of water. A human worker would likely have recognized the absurdity and responded accordingly.

The ongoing issue of prompt injection attacks is exacerbated when LLMs are given tools that enable independent actions. The inherent lack of contextual understanding and the tendency towards overconfidence can result in unpredictable outcomes. Experts like Nicholas Little and Simon Willison emphasize that while current models can sometimes get details correct, they often miss the broader context, making them more susceptible to manipulation than humans.

As AI technology advances, researchers are exploring innovative solutions. Yann LeCun suggests that embedding AIs in physical environments could enhance their understanding of social contexts. This approach may help AI systems navigate complex interactions more effectively, reducing their vulnerability to prompt injection.

Ultimately, the challenge remains that while humans leverage a rich tapestry of experiences and instincts to make decisions, LLMs operate within a limited framework. The quest for a secure AI capable of recognizing and responding to context continues, highlighting both the promise and the limitations of current AI technologies. As we develop more sophisticated models, it is crucial to address the security trilemma: balancing speed, intelligence, and security without compromising on any front.

In a world where AI plays an increasingly important role, ensuring that these systems are resilient to manipulation will be crucial for their safe and effective deployment.