UPDATE: Nvidia’s upcoming Rubin GPU, expected to be its fastest yet, is being developed with the help of new power modeling technology. The tools involved run on both Nvidia and AMD hardware, showing how hardware from both companies now plays a critical role in making chips more efficient as energy demands rise.

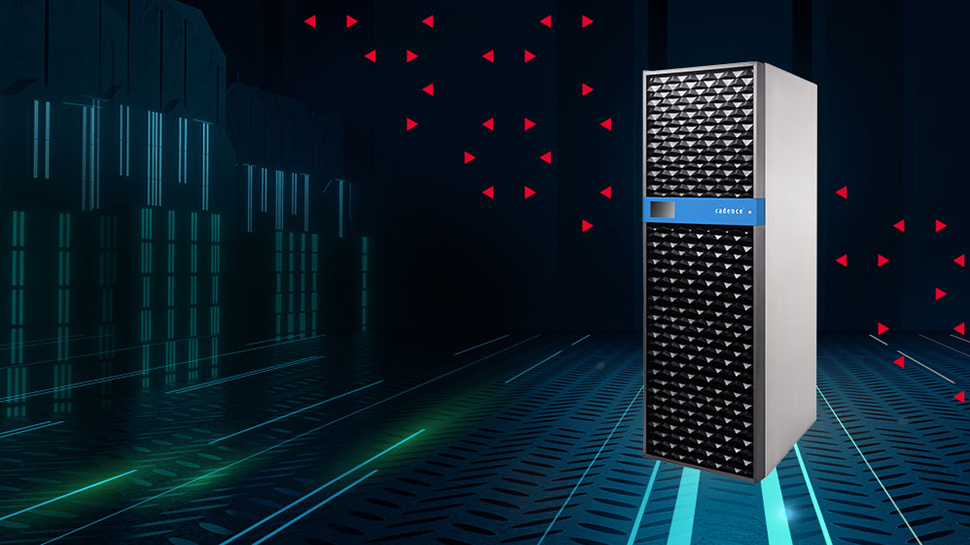

Cadence Design Systems’ Dynamic Power Analysis (DPA) app is designed to analyze massive chip designs like Rubin, which has more than 40 billion gates. It runs on the Palladium Z3 emulator, letting engineers estimate power consumption across billions of cycles in a matter of hours. That matters for AI accelerators, where different workloads stress different parts of the chip, so power draw can vary sharply from one region of the design to another.
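To make the idea concrete, here is a toy sketch of activity-based dynamic power estimation, the general kind of calculation an emulator-driven power tool performs. It is not Cadence’s DPA algorithm: the block names, toggle traces, capacitance, voltage, and clock frequency are all hypothetical. It uses the standard switching-power relation, in which each signal toggle dissipates roughly ½·C·V², so per-cycle power is ½ × toggles × C × V² × f, and then slides a fixed window over each block’s trace to find its peak-power interval.

```python
"""Toy activity-based dynamic power estimate (illustrative sketch only).

Hypothetical assumptions, not Cadence DPA internals: each block has one
effective capacitance per toggling net, a single supply voltage and clock,
and we are given a per-cycle toggle count for each block.
"""


def dynamic_power_w(toggles: float, cap_farads: float, vdd: float, freq_hz: float) -> float:
    """Switching power in watts for a given number of toggles per cycle."""
    # Each 0->1 or 1->0 transition dissipates about 0.5 * C * V^2 joules.
    energy_per_cycle_j = 0.5 * toggles * cap_farads * vdd ** 2
    # Joules per cycle times cycles per second gives watts.
    return energy_per_cycle_j * freq_hz


def peak_window(trace: list[int], window: int, cap_farads: float,
                vdd: float, freq_hz: float) -> tuple[int, float]:
    """Return (start_cycle, average_watts) for the highest-power window.

    Power is linear in toggle count, so an exact integer running sum of
    toggles is kept and only the best window is converted to watts.
    """
    if not 0 < window <= len(trace):
        raise ValueError("window must be between 1 and len(trace)")
    running = sum(trace[:window])
    best_start, best_sum = 0, running
    for start in range(1, len(trace) - window + 1):
        # Slide one cycle: add the newest cycle, drop the oldest one.
        running += trace[start + window - 1] - trace[start - 1]
        if running > best_sum:  # strict '>' keeps the earliest peak on ties
            best_start, best_sum = start, running
    return best_start, dynamic_power_w(best_sum / window, cap_farads, vdd, freq_hz)


# Hypothetical per-cycle toggle counts for one workload: a compute-heavy
# phase followed by a memory-bound phase (toy numbers, not Rubin data).
traces = {
    "tensor_cores": [9_000] * 500 + [1_000] * 500,
    "memory_ctrl": [500] * 500 + [4_000] * 500,
}

for block, trace in traces.items():
    start, watts = peak_window(trace, window=100, cap_farads=2e-15,
                               vdd=0.75, freq_hz=2e9)
    print(f"{block}: peak window starts at cycle {start}, avg {watts:.4f} W")
```

On these made-up traces the compute block peaks at the start of the run and the memory block peaks halfway through, a miniature version of the workload-dependent hotspots described above. Keeping the running sum in integer toggles avoids floating-point drift across long traces.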

With Rubin projected to draw around 700W from a single die and multi-chip configurations consuming up to 3.6kW, early simulation is essential for catching power bottlenecks before production. The pressure is real: Rubin’s first samples are now reportedly delayed until 2026 because of design issues encountered during its June tape-out on TSMC’s 3nm N3P process.

Competition is heating up as Nvidia prepares to face AMD’s upcoming MI450 GPU. Tools like DPA help here by letting developers estimate power draw accurately before silicon exists, so they can push performance without sacrificing efficiency.

Cadence’s emulator can handle designs of up to 48 billion gates, making it a valuable tool for engineers tasked with balancing power and performance. The Palladium Z3 platform uses Nvidia’s BlueField data processing units and Quantum InfiniBand networking, while the Protium X3 FPGA prototyping system is built on AMD UltraScale FPGAs. In other words, hardware from both Nvidia and AMD supports Rubin’s complex design cycle.

Since its introduction in 2016, Cadence’s DPA app has become indispensable due to the increasing complexity of AI processors. As the technology matures, the insights gained from Rubin’s development are expected to trickle down into consumer products, shaping the future of GPU technology.

With the AI chip war escalating, the stakes are high. AI GPU accelerators with up to 6TB of HBM could emerge by 2035, but the power problem is already pressing: reports indicate that some data centers are deliberately throttling tens of thousands of AI GPUs to avoid blackouts, underscoring the need for tools like Cadence’s DPA to keep power demands in check.

As the situation develops, the tech community eagerly anticipates Nvidia’s next moves, including the expected shipment of Rubin GPUs toward the end of 2026. Stay tuned for more updates on this rapidly evolving story.